- Generating a Client from OpenAPI Spec

- Automating Account and User Tokens

- Managing Participant Query Store

- Associate Daml users with users in your identity provider

- Using Services

- Write a Python Automation

- Write a JVM Automation

- Manage Ledger Access on Daml Hub

- App UI

- Ledger Initialization With Daml Script

Generating a Client from OpenAPI Spec

The examples in this guide use cURL, but you can interact with the Daml Hub APIs from any HTTP client, including one generated from the OpenAPI spec in the language of your choice.

For example, you can use openapi-generator-cli to generate clients in many languages, such as Java, Python, Scala, or Go, from the Daml Hub OpenAPI specifications for the Site API and the Application API.

Hub Site API OpenAPI Spec : Generate a Java Client

# An example for generating a Java client from the Daml Hub Site API spec:

openapi-generator-cli generate \

-i https://hub.daml.com/openapi-docs/hub-site-api.json \

-g java \

-o /tmp/hub-site-api-java-client

Hub Application API OpenAPI Spec : Generate a Java Client

# An example for generating a Java client from the Daml Hub Application API spec:

openapi-generator-cli generate \

-i https://hub.daml.com/openapi-docs/hub-app-api.json \

-g java \

-o /tmp/hub-app-api-java-client

📘 Note: The processes in this guide were tested with GNU coreutils base64; Mac's default base64 implementation is known to produce different results under some circumstances.
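Because base64 output can differ between platforms, one option is to build the `Authorization: Basic` value used by the token-minting requests later in this guide with Python's standard library instead of the `base64` command. The following is a minimal sketch; the function name `basic_auth_header` is hypothetical and not part of any Daml Hub tooling.

```python
import base64


def basic_auth_header(pac_id: str, pac_secret: str) -> str:
    """Return the value for an 'Authorization' header using HTTP Basic auth.

    Equivalent to: echo -n "${pac_id}:${pac_secret}" | base64 -w 0
    """
    raw = f"{pac_id}:{pac_secret}".encode("utf-8")
    # b64encode never inserts line breaks, so no equivalent of '-w 0' is needed
    return "Basic " + base64.b64encode(raw).decode("ascii")
```

The returned string can be passed as the `Authorization` header of whichever HTTP client you use.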

Automate Account and User Tokens

Authentication in Daml Hub: Types of tokens

Daml Hub uses three kinds of tokens:

- Account Token - preferred mechanism for managing PACs and multiple ledgers with the Daml Hub Site API

- User Token - preferred mechanism for automation when interacting with the Daml Hub Application API

- Ledger Party Token - less-preferred (but still supported) mechanism for automation when interacting with Daml Hub Application API

This guide focuses on the steps required to mint account tokens to work with the Daml Hub Site API and user tokens to work with the Daml Hub Application API. NOTE: User tokens are preferred over ledger party tokens to use with the Daml Hub Application API.

User tokens

Use a Personal Access Credential via Account Settings UI | API Spec to manage and mint account and user tokens, as described below.

Capture your account token from the ledger UI

Account tokens in Daml Hub are the preferred way to authenticate when managing your ledger or service via the Daml Hub Site APIs. You can capture the token in the UI, then automate the creation of personal access credentials (PACs) that can be used to mint short-lived account tokens for that ledger/service.

To manage all your ledgers on Hub, you should create a PAC which can mint your account token.

For the initial setup of this PAC, copy your account token using Copy Account JWT on the Account Settings - Profile page.

Then set this token to the variable ACCOUNT_TOKEN:

export ACCOUNT_TOKEN="<token copied from Copy Account JWT>"

Automate the creation of account and user tokens in Daml Hub using a Super PAC

Create a Super PAC

Create a Personal Access Credential via cURL

Create a new credential without a scope specified (Take Daml Hub Site Actions or Access User's Data from the UI) to create a PAC which can mint account and user tokens.

secret_expires_at should be specified in seconds since epoch and token_expires_in should be specified in seconds.
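As a minimal sketch of the two time fields, the following Python builds the request body with `secret_expires_at` as an absolute epoch timestamp and `token_expires_in` as a duration. The helper name `build_pac_payload` and its parameters are hypothetical; only the three JSON field names come from this guide.

```python
import json
import time


def build_pac_payload(name, secret_ttl_seconds, token_ttl_seconds, now=None):
    """Build the JSON body for creating a PAC without a scope.

    secret_expires_at is an absolute time (seconds since epoch);
    token_expires_in is a relative duration (seconds).
    """
    now = int(time.time()) if now is None else int(now)
    return {
        "name": name,
        "secret_expires_at": now + secret_ttl_seconds,
        "token_expires_in": token_ttl_seconds,
    }


# A PAC whose secret lasts 24 hours and whose tokens last 1 hour
payload = build_pac_payload("AccountAndUserPAC", 24 * 60 * 60, 60 * 60)
print(json.dumps(payload))
```

Computing the timestamp in Python also avoids relying on the GNU-specific `date -d` option.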

The example below creates a PAC that is valid for 24 hours and mints tokens that are valid for 1 hour:

curl 'https://hub.daml.com/api/v1/personal-access-credentials' \

-H 'authorization: Bearer '"$ACCOUNT_TOKEN"'' \

-H 'content-type: application/json' \

--data-raw '{"name":"AccountAndUserPAC","secret_expires_at":'"$(date -d "+24 hours" +%s)"',"token_expires_in":'"$((1*60*60))"'}'

Response

Capture the returned pac_id and pac_secret and store them securely. Treat pac_secret as a private key, as it can subsequently be used to mint account tokens and user tokens for users on this ledger.

{

"id_issued_at": 1716295061,

"name": "AccountAndUserPAC",

"pac_id": "apikey-12345678-1234-1234-1234-123456789abc",

"pac_secret": "xyaxyaxxaxxxaxaxxa",

"secret_expires_at": 1716381381,

"token_expires_in": 3600

}

Mint an account token from your Super PAC

Use the PAC to generate an account token

#base64 encode the pac_id and pac_secret

encoded_pac_id_secret=$(echo -n ${pac_id}:${pac_secret} | base64 -w 0)

curl -XPOST https://hub.daml.com/api/v1/personal-access-credentials/token \

-H 'Authorization: Basic '"${encoded_pac_id_secret}"'' \

-H 'content-type: application/json' \

--data-raw '{"scope": "site"}'

Response

Capture the returned access token and pass this as the Authorization Bearer token on subsequent Site API requests.

{

"access_token": "eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsImtpZCI6ImRhYmwtY...",

"expires_in": 3600,

"token_type": "Bearer"

}

Minting user token from your Super PAC

With the full support of the administrative User Management and Party Management services on Hub, you can now manage your parties and users either via the Hub Console, gRPC API, or HTTP JSON API.

The user token is also preferred for all automation for applications that interact with the Application API. A user token corresponds to a specific user on the ledger, which is granted specific actAs and readAs rights. Note that the 'user' in user token refers only to the users created on the ledger, and is distinct from the Daml Hub end user or the end user of any app you may create.

A user token should be used in place of a multi-party ledger token when a user needs to readAs and/or actAs multiple parties.
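The token-minting endpoint in this guide receives a different request body depending on whether an account token or a user token is wanted. The sketch below assembles both bodies as they appear in the cURL examples in this guide; the function name `token_request_body` is hypothetical.

```python
def token_request_body(scope, ledger_id=None, user=None):
    """Build the JSON body for a token-minting request from a Super PAC.

    scope "site" requests an account token; scope "ledger:data" requests
    a user token and needs the ledger ID and the ledger user ID.
    """
    body = {"scope": scope}
    if scope == "ledger:data":
        if not ledger_id or not user:
            raise ValueError("ledger_id and user are required for a user token")
        body["ledger"] = {"ledgerId": ledger_id, "user": user}
    return body


account_body = token_request_body("site")
user_body = token_request_body("ledger:data", "abc123defghi546", "yourUser")
```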

Identify the ledger and ledger owner user

To create and use user tokens on a given ledger, you must first identify the ledger and the ledger owner user. You then use the PAC to mint a user token. You can also use cURL to list all existing PACs, or to revoke an existing PAC.

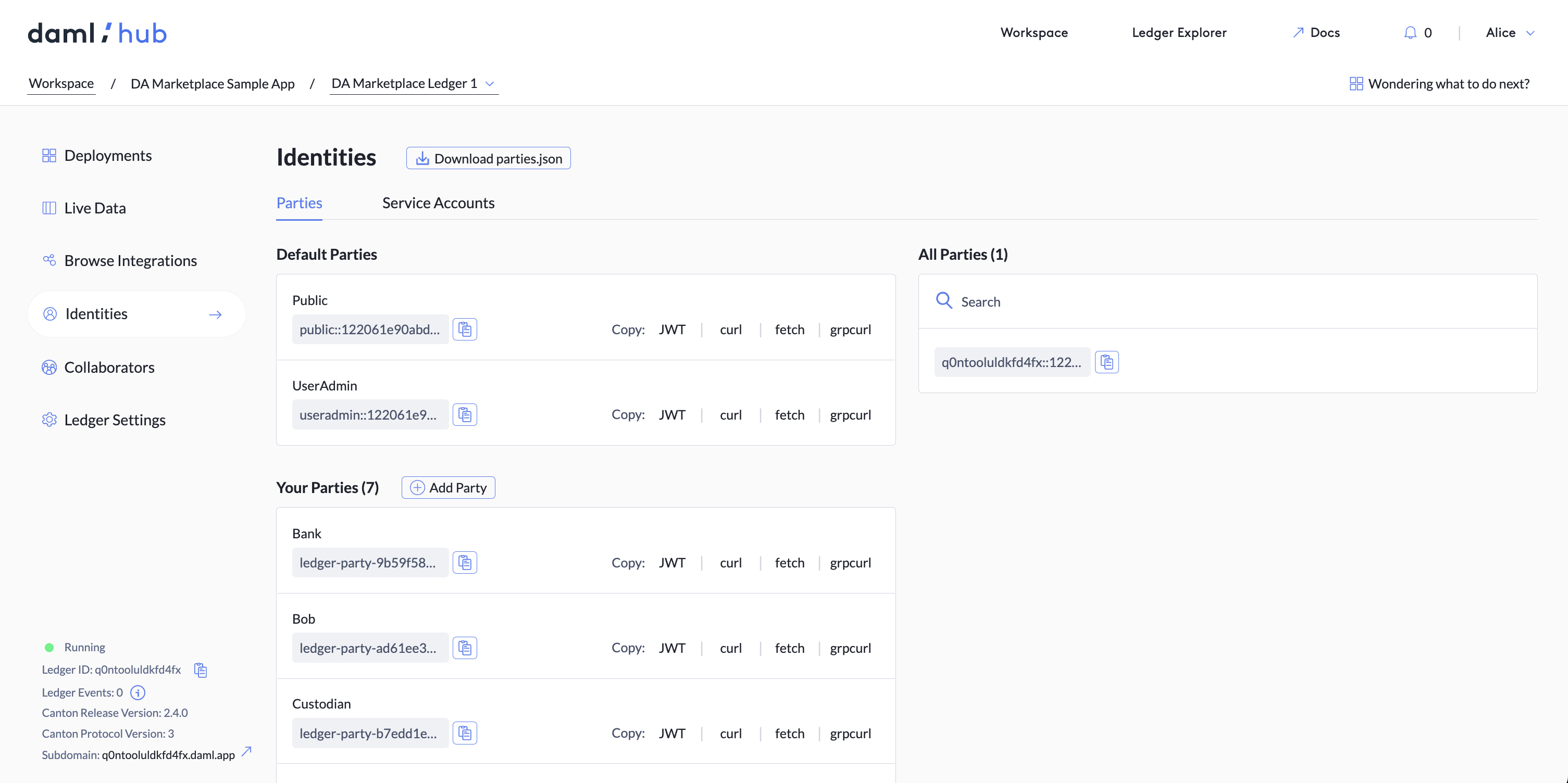

- Create a new ledger/participant/scratchpad on Hub and identify the <ledgerId> or <serviceId> once created.

- Go to the Identities tab for the ledger and find the user ID of the ledger owner (typically begins with auth0, google-oauth2, or google-apps). Alternatively, you can curl the account endpoint:

curl -sH 'authorization: Bearer '"$ACCOUNT_TOKEN"'' https://hub.daml.com/api/v1/account/user

Mint a user token from your Super PAC

Now that you have identified the ledger and user ID of the ledger owner, you are ready to mint a token for this user as required for your automations.

curl -XPOST https://hub.daml.com/api/v1/personal-access-credentials/token \

-H 'Authorization: Basic '"${encoded_pac_id_secret}"'' \

-H 'content-type: application/json' \

--data-raw '{"ledger": { "ledgerId": "abc123defghi546", "user": "yourUser"},"scope": "ledger:data"}'

Response

Capture the returned access token and pass this as the Authorization Bearer token on subsequent Application API requests.

{

"access_token": "eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsImtpZCI6ImRhYmwtY...",

"expires_in": 86400,

"token_type": "Bearer"

}

Create a PAC able to mint only an account token in Daml Hub

Create a Site-scoped PAC

Create a Personal Access Credential via cURL

You can also create a PAC which can only be used to mint account tokens.

The general instructions are the same as those detailed above for a Super PAC, but instead create the new credential with the scope set to site, so that the PAC can mint only account tokens.

curl 'https://hub.daml.com/api/v1/personal-access-credentials' \

-H 'authorization: Bearer '"$ACCOUNT_TOKEN"'' \

-H 'content-type: application/json' \

--data-raw '{"name":"AccountTokenPAC","scope":"site","secret_expires_at":'"$(date -d "+24 hours" +%s)"',"token_expires_in":'"$((24*60*60))"'}'

Response

Capture the returned pac_secret and store it securely. Treat it as a private key, as it can subsequently be used to mint account tokens.

{

"id_issued_at": 1699923029,

"name": "AccountTokenPAC",

"pac_id": "<pac_id>",

"pac_secret": "<pac_secret>",

"scope": "site",

"secret_expires_at": 1707699015,

"token_expires_in": 86400

}

Mint an account token from your PAC

Use the PAC to generate an account token

#base64 encode the pac_id and pac_secret

encoded_pac_id_secret=$(echo -n ${pac_id}:${pac_secret} | base64 -w 0)

curl -XPOST https://hub.daml.com/api/v1/personal-access-credentials/token \

-H 'Authorization: Basic '"${encoded_pac_id_secret}"''

Response

Capture the returned access token and pass this as the Authorization Bearer token on subsequent Site API requests.

{

"access_token": "eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsImtpZCI6ImRhYmwtY...",

"expires_in": 86400,

"token_type": "Bearer"

}

Create a PAC able to mint only user tokens in Daml Hub

You can also create a PAC which can only be used to mint a user token on a particular ledger or service.

Identify the ledger and ledger owner user

- Create a new ledger on Hub and identify the <ledgerId> once created.

- Go to the Identities tab for the ledger and find the user ID of the ledger owner (typically begins with auth0, google-oauth2, or google-apps). Alternatively, you can curl the account endpoint:

curl -H 'authorization: Bearer '"$ACCOUNT_TOKEN"'' https://hub.daml.com/api/v1/account/user

Create a PAC to mint a user token

Now that you have identified the ledger and user ID of the ledger owner, you are ready to create a PAC so you can mint tokens for this user as required for your automations.

Personal Access Credentials can either be created through the Hub UI or via cURL commands as detailed below.

curl 'https://hub.daml.com/api/v1/personal-access-credentials' \

-H 'Authorization: Bearer '"${ACCOUNT_TOKEN}"'' \

-H 'Content-Type: application/json' \

--data-raw '{"ledger":{"ledgerId":"abcd123456","user":"someUser"},"name":"user","scope":"ledger:data","secret_expires_at":1716910484}'

Mint a user token for your ledger owner user

#base64 encode the pac_id and pac_secret

encoded_pac_id_secret=$(echo -n ${pac_id}:${pac_secret} | base64 -w 0)

curl -XPOST https://hub.daml.com/api/v1/personal-access-credentials/token \

-H 'Authorization: Basic '"${encoded_pac_id_secret}"''

Managing Participant Query Store

The Participant Query Store (PQS) is automatically created whenever you start a Scratchpad or Participant service.

You can view logs, manage status, and pause, resume, or delete the Participant Query Store from the Hub Console. You can also take the pause, resume, and delete actions programmatically, for example if you want to delete the default configuration and create a new PQS instance with your preferred settings.

The PQS section of the Site API documentation provides the details of the API endpoints available to manage the Participant Query Store.

Recreate your Participant Query Store with new configuration

A definition of the different streaming and offset options for PQS can be found in the console guide.

# Delete the existing instance

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/pqs/delete' \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer '"$ACCOUNT_TOKEN"''

# Create a new instance with your TransactionStream and Latest configuration

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/pqs' \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer '"$ACCOUNT_TOKEN"'' \

--data '{

"datasource": "TransactionStream",

"ledgerStart": "Latest"

}'

Change the configuration of your Participant Query Store

# Update configuration of an existing instance

curl --request PATCH --location 'https://hub.daml.com/api/v1/services/{serviceId}/pqs' \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer '"$ACCOUNT_TOKEN"'' \

--data '{

"datasource": "TransactionStream",

"ledgerStart": "Latest"

}'

Pause a PQS instance

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/pqs/pause' \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer '"$ACCOUNT_TOKEN"''

Get details of currently running PQS instance

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/pqs' \

--header 'Authorization: Bearer '"$ACCOUNT_TOKEN"''

Associate Daml users with users in your identity provider

This section describes how to manage access to the application from your existing identity and access management (IAM) product. You can:

- allocate parties

- create an end user with ActAs rights

- mint user tokens for the newly-created user and use them to proxy requests for that user

Party allocation

You can allocate a party using the gRPC API or the JSON API.

Allocate a party using the gRPC API (grpcurl)

Allocate Party gRPC Specification

grpcurl -H "Authorization: Bearer $LEDGER_OWNER_TOKEN" -d @ "$SUB_DOMAIN_HOST:443" \

com.daml.ledger.api.v1.admin.PartyManagementService/AllocateParty <<EOM

{

"partyIdHint":"myexampleparty1",

"displayName":"My Example Party 1"

}

EOM

Allocate a party using the JSON API (curl)

curl --fail-with-body --silent --show-error -XPOST \

'https://'"$SUB_DOMAIN_HOST"'/v1/parties/allocate' \

-H 'accept: application/json' \

-H 'content-type: application/json' \

-H 'authorization: Bearer '"$LEDGER_OWNER_TOKEN"'' \

-d '{ "identifierHint" : "myexampleparty1", "displayName": "My Example Party 1" }'

To parse the unique identifier that is returned, pipe the response to jq with a filter similar to the one below:

jq -r '( first(.[]) | .identifier )'

Create an end user with actAs rights to an example party

As with party allocation, this can be accomplished using the gRPC API or the JSON API.

Note: If you’re syncing with users in an external identity provider, the UserID created on Hub should ideally match the subject (primary identity) of the user in your identity provider to avoid additional mapping. In the example below we assume the external identity of the user is external-idp|MyUser1

Let us assume the party created in the allocate step just before this is called myexampleparty1::12207b85d70d88941ab1af9608aaa1c8710ceaec7b3246a192ecbc38918f6d413b06
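With the user ID and party above, the request body for the JSON API call can be assembled programmatically, which helps when you create many users from an identity provider. This is a minimal sketch: the helper name `create_user_payload` is hypothetical, and the field names (`userId`, `primaryParty`, `rights`, `type`, `party`) follow the JSON API example in this section.

```python
def create_user_payload(user_id, primary_party, act_as=(), read_as=()):
    """Build the body for a JSON API create-user request.

    Each party in act_as becomes a CanActAs right and each party in
    read_as becomes a CanReadAs right.
    """
    rights = [{"type": "CanActAs", "party": party} for party in act_as]
    rights += [{"type": "CanReadAs", "party": party} for party in read_as]
    return {"userId": user_id, "primaryParty": primary_party, "rights": rights}


party = "myexampleparty1::12207b85d70d88941ab1af9608aaa1c8710ceaec7b3246a192ecbc38918f6d413b06"
payload = create_user_payload("external-idp|MyUser1", party, act_as=[party])
```

Serializing the result with `json.dumps` avoids hand-written JSON mistakes such as missing or trailing commas.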

Create user using the gRPC API (grpcurl)

gRPC Specifications | JSON API

grpcurl -H "Authorization: Bearer $LEDGER_OWNER_TOKEN" -d @ "$SUB_DOMAIN_HOST:443" \

com.daml.ledger.api.v1.admin.UserManagementService/CreateUser <<EOM

{

"user": {

"id" : "external-idp|MyUser1",

"primaryParty" : "myexampleparty1::12207b85d70d88941ab1af9608aaa1c8710ceaec7b3246a192ecbc38918f6d413b06"

},

"rights": [

{

"party": "myexampleparty1::12207b85d70d88941ab1af9608aaa1c8710ceaec7b3246a192ecbc38918f6d413b06",

"type": "CanActAs"

}

]

}

EOM

Create user using the JSON API (curl)

curl --fail-with-body --silent --show-error -XPOST \

'https://'"$SUB_DOMAIN_HOST"'/v1/user/create' \

-H 'accept: application/json' \

-H 'content-type: application/json' \

-H 'authorization: Bearer '"$LEDGER_OWNER_TOKEN"'' \

-d '{

"userId": "external-idp|MyUser1",

"primaryParty": "myexampleparty1::12207b85d70d88941ab1af9608aaa1c8710ceaec7b3246a192ecbc38918f6d413b06",

"rights": [

{

"party": "myexampleparty1::12207b85d70d88941ab1af9608aaa1c8710ceaec7b3246a192ecbc38918f6d413b06",

"type": "CanActAs"

}

]

}'

Mint a user token on behalf of an end user

Create a PAC for the user you have just created, here for ledger xyzabcdefghi:

curl -XPOST 'https://hub.daml.com/api/v1/personal-access-credentials' \

-H 'authorization: Bearer '"$ACCOUNT_TOKEN"'' \

--data-raw '

{

"ledger": {

"ledgerId": "xyzabcdefghi",

"user": "external-idp|MyUser1"

},

"name": "User PAC for user external-idp|MyUser1 on ledger xyzabcdefghi",

"scope": "ledger:data",

"secret_expires_at": 1,

"token_expires_in": 3600

}'

Response

Capture the returned pac_secret and pac_id and store them securely in a place where they can be associated with only this user and retrieved for subsequent token refresh requests. Treat the pac_secret as a private key, as it can subsequently be used to mint user tokens for this user on this ledger.

{

"id_issued_at": 1699919904,

"name": "User PAC for user external-idp|MyUser1 on ledger xyzabcdefghi",

"pac_id": "<pac_id>",

"pac_secret": "<pac_secret>",

"scope": "ledger",

"secret_expires_at": 1700524685,

"token_expires_in": 3600

}

Use a token to proxy requests for the end user

Getting the token for an end user allows you to proxy requests on their behalf. These are the steps to follow behind the scenes when a user logs in to the end application:

- Determine the identity of the end user you want to proxy requests as (either from an external identity provider or from a system lookup)

- Create a User PAC for that specific user as above, and store it permanently as a sensitive credential as per your firm’s security policy associated with this end user

- Using the specific User PAC for the end user, mint a token for that user and return it

encoded_pac_id_secret=$(echo -n ${pac_id}:${pac_secret} | base64 -w 0)

curl -XPOST https://hub.daml.com/api/v1/personal-access-credentials/token \

-H 'Authorization: Basic '"${encoded_pac_id_secret}"''

- Issue a streaming or create-contract request to the ledger as the end user, passing the minted token
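Since minted tokens expire (the token response carries `expires_in`), a proxy typically keeps each user's token and mints a new one only when the old one is about to expire. The sketch below shows one way to do that; the class `TokenCache`, the `mint` callable, and the 60-second safety margin are all assumptions for illustration. `mint` stands for whatever function performs the token request above for a given user and returns the parsed JSON response.

```python
import time


class TokenCache:
    """Cache one access token per end user and re-mint shortly before expiry."""

    def __init__(self, mint, clock=time.time, margin_seconds=60):
        self._mint = mint              # callable: user_id -> token response dict
        self._clock = clock            # injectable so the cache can be tested
        self._margin = margin_seconds  # refresh this long before actual expiry
        self._tokens = {}              # user_id -> (access_token, expiry_time)

    def get(self, user_id):
        now = self._clock()
        cached = self._tokens.get(user_id)
        if cached is not None and cached[1] - self._margin > now:
            return cached[0]
        response = self._mint(user_id)
        token = response["access_token"]
        self._tokens[user_id] = (token, now + response["expires_in"])
        return token
```

Each proxied request then calls `cache.get(user_id)` and sends the result as the Bearer token.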

Move from multi-party token to user token for multi-party workflow

This example details the creation and usage of a user with actAs rights to multiple parties.

- Create a party for the primary account holder (primaryParty), and capture the unique ID in variable party1

grpcurl -H "Authorization: Bearer $LEDGER_OWNER_TOKEN" -d @ "$SUB_DOMAIN_HOST:443" \

com.daml.ledger.api.v1.admin.PartyManagementService/AllocateParty <<EOM

{

"partyIdHint":"primaryparty",

"displayName":"Primary Party"

}

EOM

- Create a party for the secondary account holder (secondaryParty), and capture the ID in variable party2

grpcurl -H "Authorization: Bearer $LEDGER_OWNER_TOKEN" -d @ "$SUB_DOMAIN_HOST:443" \

com.daml.ledger.api.v1.admin.PartyManagementService/AllocateParty <<EOM

{

"partyIdHint":"secondaryParty",

"displayName":"Secondary Party"

}

EOM

- Create an AccountHolder user through the admin API with actAs rights to both parties

grpcurl -H "Authorization: Bearer $LEDGER_OWNER_TOKEN" -d @ "$SUB_DOMAIN_HOST:443" \

com.daml.ledger.api.v1.admin.UserManagementService/CreateUser <<EOM

{

"user": {

"id" : "AccountHolder"

},

"rights": [

{

"party": "$party1",

"type": "CanActAs"

},

{

"party": "$party2",

"type": "CanActAs"

}

]

}

EOM

- Create a PAC for the AccountHolder user on ledger xyzabcdefghi, and store the returned pac_secret and pac_id securely.

curl -XPOST 'https://hub.daml.com/api/v1/personal-access-credentials' \

-H 'authorization: Bearer '"$ACCOUNT_TOKEN"'' \

--data-raw '

{

"ledger": {

"ledgerId": "xyzabcdefghi",

"user": "AccountHolder"

},

"name": "User PAC for user AccountHolder on ledger xyzabcdefghi",

"scope": "ledger:data",

"secret_expires_at": 1,

"token_expires_in": 3600

}'

Response

Capture the returned pac_secret and pac_id and store them securely in a place where they can be associated with only this user and retrieved for subsequent token refresh requests. Treat the pac_secret as a private key, as it can subsequently be used to mint user tokens for this user on this ledger.

{

"id_issued_at": 1699919904,

"name": "User PAC for user AccountHolder on ledger xyzabcdefghi",

"pac_id": "<pac_id>",

"pac_secret": "<pac_secret>",

"scope": "ledger",

"secret_expires_at": 1700524685,

"token_expires_in": 3600

}

- Use the PAC to create a token for the AccountHolder user, and capture it in variable user_token

encoded_pac_id_secret=$(echo -n ${pac_id}:${pac_secret} | base64 -w 0)

curl -XPOST https://hub.daml.com/api/v1/personal-access-credentials/token \

-H 'Authorization: Basic '"${encoded_pac_id_secret}"''

- Issue a transaction subscription with the user token, filtering for both primaryParty and secondaryParty

# The heredoc is unquoted so that the shell expands $party1 and $party2

grpcurl -H "Authorization: Bearer $user_token" -d @ "$SUB_DOMAIN_HOST:443" \

com.daml.ledger.api.v1.TransactionService/GetTransactions <<EOM

{

"filter": {

"filtersByParty": {

"$party1": {},

"$party2": {}

}

},

"ledgerId": "xyzabcdefghi"

}

EOM

Using Services

Introduction

This section describes how to use the new Daml Hub APIs to automate the deployment of participants and synchronizers.

It covers how to:

- create a synchronizer and a participant

- check their statuses

- connect the participant to the synchronizer

- upload and deploy a DAR

- list users and mint a user token

- exercise a contract on that DAR

A client generated from the OpenAPI specifications is the recommended mechanism to interact with these APIs. You can also experiment with them by installing a utility like Postman and uploading the OpenAPI specs.

• See Generating a Client from OpenAPI Spec

• Daml Hub OpenAPI specifications can be found at Site API and Application API.

Token required for Site API calls

See section Automate the creation of account and user tokens in Daml Hub using a Super PAC for how to automate the creation of an Account Token.

For the first manual run-through, copy the account token from the UI and set it to the environment variable.

To make these curl requests, you need an auth token. To get one:

- go to Account Profile

- navigate to your account settings page

- click "copy account JWT"

- in the terminal, set ACCOUNT_TOKEN=<token_copied_from_account_settings_page>

Create a connected Synchronizer and Participant

You can create a synchronizer and a participant together; they are connected to each other automatically once both are up and running.

curl --location 'https://hub.daml.com/api/v1/services/network' \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data '{"participants":[{"name":"partNetApp","size":"small"}],"synchronizerName":"syncNetApp"}'

Create and pair a synchronizer and a participant

You can also create a synchronizer and a participant independently, and then connect them to each other manually.

Create a synchronizer

SynchronizerCreateResults=$(curl --location 'https://hub.daml.com/api/v1/services' \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data '{

"name": "mysynchronizer",

"serviceType": "synchronizer"

}')

# response

{"serviceId": "t32po9l8dgmu3gypzph9", "synchronizerId": "hub_domain_protocol_5::12208f599610fcf04c59ee54df23b9f12654bc896d31f5231f977871f28b47694741", "hostname": "t32po9l8dgmu3gypzph9.connectplus.daml-dev.app:443", "asOf": "2024-01-03T11:53:16.792189Z"}

Create a participant

A participant may take between 2 and 5 minutes to start up. You can check the status of your participant and synchronizer with their respective status endpoints.

ParticipantCreateResults=$(curl --location 'https://hub.daml.com/api/v1/services' \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data '{

"name": "myparticipant",

"serviceType": "participant",

"size": "small"

}')

# response

{"serviceId": "nxe5f4302fm9rl3humgh", "participantId": "PAR::nxe5f4302fm9rl3humgh::1220349ce017d2a00e58cd09c8320a6483945fe2ba0326251b5b92e871c85e3b0b97", "asOf": "2024-01-03T11:53:56.430407Z"}

Check the participant status

curl --location "https://hub.daml.com/api/v1/services/$(echo $ParticipantCreateResults | jq -r '.serviceId')/participant/status" \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN"

# response

{

"active": true,

"connectedDomains": [],

"participantId": "PAR::nxe5f4302fm9rl3humgh::122063cf362a85f18d93a6f964b2c4c919af2b6092d55f5a696c0f35fc8179ab076d",

"uptime": "48m 6.001003s"

}

Check the synchronizer status

curl --location "https://hub.daml.com/api/v1/services/$(echo $SynchronizerCreateResults | jq -r '.serviceId')/synchronizer/status" \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN"

# response

{"active":true,"connectedParticipants":[],"synchronizerId":"hub_domain_protocol_5::12208f599610fcf04c59ee54df23b9f12654bc896d31f5231f977871f28b47694741","uptime":"23m 50.108971s"}

Once the participant and the synchronizer are both up and healthy, you can join them together.
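Because a service can take a few minutes to start, automation usually polls the status endpoint until `active` is true before joining. The following sketch shows such a loop; `wait_until_active` and its parameters are hypothetical, and `fetch_status` stands for any function of yours that calls the status endpoint and returns the parsed JSON.

```python
import time


def wait_until_active(fetch_status, timeout_seconds=600, interval_seconds=15,
                      sleep=time.sleep, clock=time.monotonic):
    """Poll fetch_status() until the returned status has "active": true.

    Returns the final status dict, or raises TimeoutError if the service
    is still not active once timeout_seconds have elapsed.
    """
    deadline = clock() + timeout_seconds
    while True:
        status = fetch_status()
        if status.get("active"):
            return status
        if clock() >= deadline:
            raise TimeoutError("service did not become active in time")
        sleep(interval_seconds)
```

Call it once for the participant and once for the synchronizer, then continue with the join steps.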

Join a synchronizer and a participant

- Joining a synchronizer and a participant requires two steps:

- set participant permissions on the synchronizer

- tell the participant to join the synchronizer
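The two steps above each send a small JSON body. As a sketch, the bodies can be derived from the create responses captured earlier. The function names are hypothetical, and the host format is an assumption taken from the `https://{serviceId}.daml.app:443` pattern used in this guide, with the synchronizer's service ID substituted.

```python
def permission_body(participant_id, level="Submission"):
    """Body for setting participant permissions on the synchronizer."""
    return {"permissionLevel": level, "participantId": participant_id}


def connect_body(synchronizer_name, synchronizer_service_id):
    """Body telling the participant which synchronizer to join.

    The host format is assumed from the pattern shown in this guide.
    """
    return {
        "name": synchronizer_name,
        "host": f"https://{synchronizer_service_id}.daml.app:443",
    }


step_one = permission_body("PAR::nxe5f4302fm9rl3humgh::1220349c...")
step_two = connect_body("mysynchronizer", "t32po9l8dgmu3gypzph9")
```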

Set participant permissions on the synchronizer

curl --location "https://hub.daml.com/api/v1/services/$(echo $SynchronizerCreateResults | jq -r '.serviceId')/participants" \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data "{

\"permissionLevel\": \"Submission\",

\"participantId\": \"$(echo $ParticipantCreateResults | jq -r '.participantId')\"

}"

# response is empty 200

Tell the participant to join the synchronizer

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/connect' \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data '{

"name": "mysynchronizer",

"host": "https://{serviceId}.daml.app:443"

}'

- Now you can check the participant status and see that it has connected successfully:

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/participant/status' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN"

# response

{

"active": true,

"connectedDomains": [

{

"connected": true,

"domainId": "hub_domain_protocol_5::12205eacc50413adaa5cac0e76136c522baa9264ff791181b92e7ab22e758b8e960a"

}

],

"participantId": "PAR::ztc23bqgoogyiultto33::1220259eb7bb087b87288db064ca90b02707514363ea2c19dc9a0accd69732824f1a",

"uptime": "57m 18.411786s"

}

Likewise, check the synchronizer status:

curl --location 'https://hub.daml.com/api/v1/services/mynr3opxt7ntnw6tlog7/synchronizer/status' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN"

# response

{

"active": true,

"connectedParticipants": [

{

"participantId": "PAR::{serviceId}::122063cf362a85f18d93a6f964b2c4c919af2b6092d55f5a696c0f35fc8179ab076d"

}

],

"synchronizerId": "hub_domain_protocol_5::12203396066b54fa24d0ea8213a0a499522f2ea695e8d280bb40fe945cd534de288c",

"uptime": "1h 17m 44.528644s"

}

- They are now connected. Next, you can upload a DAR and exercise a contract.

Upload and deploy a DAR

- To get a DAR on your ledger, first build a DAR for your Daml application. For this example to work you'll need to use the DAR from Hub Automation Example.

Upload the DAR

- This uploads the file as example.dar.

curl --location 'https://hub.daml.com/api/v1/artifacts/files/example.dar' \

--header 'Content-Type: application/octet-stream' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data-binary '@{path_to_dar}/example-model-0.1.0.dar'

# response

{

# lots of stuff here ...

"artifactHash": "02b030796ace9bc734e95bcb9878a121a6121d321912f03a1c99c45902111507"

}

Deploy DAR to participant

- Next, deploy it to your participant using the provided artifact hash:

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/artifacts' \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data '{

"artifactHash": "02b030796ace9bc734e95bcb9878a121a6121d321912f03a1c99c45902111507"

}'

# response

{

"asOf": "2023-12-15T18:29:17.176600Z"

}

Deploy automation

curl --location 'https://hub.daml.com/api/v1/artifacts/files/chat-automation.tar.gz' \

--header 'Content-Type: application/octet-stream' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN" \

--data-binary '@{path_to_automation}/daml_chat-4.0.0.tar.gz'

# response

{

"artifactContent": {

"entityInfo": {

"apiVersion": "dabl.com/v1",

"artifactHash": "b3eedc13ca03cbf340f7fa109e20a63c6c6505fbc6d93e77822db6fcaa34f151",

"iconArtifactHash": null,

"entity": {

"tag": "Automation",

"value": {

"tag": "LedgerBot",

"value": {

"entityName": "daml_chat-4.0.0.tar.gz",

"runtime": "python-file",

"metadata": {}

}

}

}

},

"createdAt": "2023-12-15T15:13:04.571521Z",

"updatedAt": "2023-12-15T15:13:04.571521Z"

},

"artifactHash": "b3eedc13ca03cbf340f7fa109e20a63c6c6505fbc6d93e77822db6fcaa34f151"

}

User tokens and executing a contract

- The steps for generating a user token are:

- obtain a user ID

- create a Personal Access Credential (PAC)

- use your PAC against the token minting endpoint to get a token

These steps are outlined in the Automate Account and User Tokens section of the docs.

Find your user ID

curl --location 'https://hub.daml.com/api/v1/services/{serviceId}/user' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $ACCOUNT_TOKEN"

# response

{

"result": {

"userId": "auth0|1232323278789987897",

"primaryParty": "ledger-party-6c8a103c-0a66-4535-b795-230a568e49d3::122063cf362a85f18d93a6f964b2c4c919af2b6092d55f5a696c0f35fc8179ab076d"

},

"status": 200

}

Request a PAC for this user ID

Refer to section Minting user token from your Super PAC for how to mint a user token.

- To list the available parties, use the token you received back to call an app-level endpoint:

curl --location "https://{serviceId}.daml.app/v1/parties" \

--header 'Accept: application/json' \

--header "Authorization: Bearer $USER_TOKEN"

# response

{

"result": [

{

"identifier": "y8wmvsic3qxxcprux2c0::1220bfdcc7333ade46c8d608c63e79e88e08daa8b826238928a6480c66d555d57a13",

"isLocal": true

},

{

"identifier": "1220e6098a204ba83b698b403f773686b021405efd3c605bf17b6519d9a2f38df098::1220e6098a204ba83b698b403f773686b021405efd3c605bf17b6519d9a2f38df098",

"isLocal": false

},

{

"displayName": "Public",

"identifier": "public::1220bfdcc7333ade46c8d608c63e79e88e08daa8b826238928a6480c66d555d57a13",

"isLocal": true

},

{

"displayName": "UserAdmin",

"identifier": "useradmin::1220bfdcc7333ade46c8d608c63e79e88e08daa8b826238928a6480c66d555d57a13",

"isLocal": true

},

{

"displayName": "Samwise Gangi",

"identifier": "ledger-party-acbcc19d-4e42-4847-b676-ee66cd3f8ae0::1220bfdcc7333ade46c8d608c63e79e88e08daa8b826238928a6480c66d555d57a13",

"isLocal": true

}

],

"status": 200

}

Write a Python Automation

This guide contains a simple example of a Python automation that can be run in Daml Hub. This example can be copied and then adjusted to your application.

The code for this example can be found in digital-asset/hub-automation-example/python. Note that the Daml model in example-model is for reference and does not need to be copied when using the code as a template.

Please bear in mind that this code is provided for illustrative purposes only, and as such may not be production quality and/or may not fit your use cases.

Build the automation

Run make all in the project's root directory. This command uses poetry to build and package a .tar.gz file with the automation. It then copies the file with the correct version and name (as set in the Makefile) into the root directory. The .tar.gz file should then be uploaded to Daml Hub to run the automation.

Run the automation locally

Run DAML_LEDGER_PARTY="party::1234" poetry run python3 -m bot

Since localhost:6865 is set as a default, you do not need to set the ledger URL. However, DAML_LEDGER_PARTY must be set to a party that is allocated on the Daml ledger you are testing with - which is always slightly different on Canton ledgers due to the participant ID. run_local.sh can be used to dynamically fetch the Alice party and start the automation with that party.

Structure the automation

A Hub Python automation should always be a module named bot as it is run on Hub with python3 -m bot.

pyproject.toml

The configuration file for the project that the poetry tool uses to build the automation:

[tool.poetry]

name = "bot"

version = "0.1.0"

description = "Example of a Daml Hub Python Automation"

authors = ["Digital Asset"]

[tool.poetry.dependencies]

python = "^3.9"

dazl = "^7.3.1"

Directory structure

├── bot

│ ├── __init__.py

│ └── pythonbot_example.py

├── poetry.lock

├── pyproject.toml

__init__.py

The file with the main portion of the code (in pythonbot_example.py) can have any name, but must be imported in __init__.py where the main function should be called. This file runs when the automation is initialized:

from .pythonbot_example import main

from asyncio import run

run(main())

Automation Code

Python automations running in Daml Hub generally use the Dazl library to react to incoming Daml contracts.

Package IDs

Dazl recognizes template names in the format package_id:ModuleName.NestedModule:TemplateName:

package_id="d36d2d419030b7c335eeeb138fa43520a81a56326e4755083ba671c1a2063e76"

# Define the names of our templates for later reuse

class Templates:

    User = f"{package_id}:User:User"

    Alias = f"{package_id}:User:Alias"

    Notification = f"{package_id}:User:Notification"

The package ID is the unique identifier of the .dar of the contracts to follow. Including the package ID ensures that the automation only reacts to templates from the specified Daml model. If the package ID is not included, Dazl streams all templates that have the same name. This is particularly important when a new version of a Daml model is uploaded to the ledger, since the names of the templates may remain the same.

The package ID of a .dar can be found by running daml damlc -- inspect /path/to/dar | grep "package"

Environment variables

Dazl requires the URL of the Daml ledger to connect to as well as a party to act as. These are always set as environment variables in automations running in Daml Hub, but adding defaults can help with running locally.

import os

# The URL of the ledger you would like to connect to

url = os.getenv('DAML_LEDGER_URL') or "localhost:6865"

# The party that is running the automation.

party = os.getenv('DAML_LEDGER_PARTY') or "party"

DAML_LEDGER_PARTY is set to the party that you specified when deploying the automation. Note that this party can only see and operate on contracts that it has access to as a signatory or observer.
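As a minimal sketch, the two lookups above could be gathered into one helper so that the defaults are easy to exercise locally. The Settings class and load_settings function are hypothetical names introduced for illustration and are not part of Dazl or Daml Hub; the variable names and default values are the ones shown above.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Connection settings for the automation (hypothetical helper type)."""
    url: str
    party: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the ledger URL and party, falling back to the local defaults."""
    # An unset or empty variable falls back to the default, matching
    # the `os.getenv(...) or default` pattern used above.
    url = env.get("DAML_LEDGER_URL") or "localhost:6865"
    party = env.get("DAML_LEDGER_PARTY") or "party"
    return Settings(url=url, party=party)
```

Passing a plain dictionary instead of os.environ makes the defaults easy to check without touching the real environment.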

Stream

After defining the templates, the example bot in this repository sets up a stream that runs indefinitely. This stream sends a log message when a contract is created or deleted, or when the stream has reached the current state of the ledger. If a Notification contract is created, it automatically exercises the Acknowledge choice:

# Start up a dazl connection

async with connect(url=url, act_as=Party(party)) as conn:

    # Stream all of our templates forever

    async with conn.stream_many([Templates.User, Templates.Alias, Templates.Notification]) as stream:

        async for event in stream.items():

            if isinstance(event, CreateEvent):

                logging.info(f"Noticed a {event.contract_id.value_type} contract: {event.payload}")

                if str(event.contract_id.value_type) == Templates.Notification:

                    await conn.exercise(event.contract_id, "Acknowledge", {})

            elif isinstance(event, ArchiveEvent):

                logging.info(f"Noticed that a {event.contract_id.value_type} contract was deleted")

            elif isinstance(event, Boundary):

                logging.info(f"Up to date on the current state of the ledger at offset: {event.offset}")

stream.items() yields a CreateEvent when a contract is created, an ArchiveEvent when a contract is archived, and a Boundary event once the stream has caught up to the current end of the ledger.

The Boundary can be helpful when starting a stream on a ledger that already has data. The boundary event has an offset parameter that can be passed to conn.stream_many, after which the stream begins from the offset point.
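One possible way to reuse a Boundary offset across restarts is to persist it and read it back at startup. The sketch below covers only the persistence step, using the standard library. The helper names and the offset.txt file name are hypothetical, and whether an automation has durable storage on Daml Hub is not covered by this guide. Passing the loaded value on to conn.stream_many is left to the automation code, as described above.

```python
from pathlib import Path
from typing import Optional


def save_offset(path: Path, offset: str) -> None:
    """Store the offset by writing a temporary file and renaming it."""
    tmp = path.with_name(path.name + ".tmp")
    tmp.write_text(offset, encoding="utf-8")
    # Renaming over the target avoids leaving a half-written file behind.
    tmp.replace(path)


def load_offset(path: Path) -> Optional[str]:
    """Return the stored offset, or None if nothing has been saved yet."""
    try:
        text = path.read_text(encoding="utf-8").strip()
    except FileNotFoundError:
        return None
    return text or None
```

For example, an automation could call save_offset(Path("offset.txt"), event.offset) when it sees a Boundary and call load_offset(Path("offset.txt")) before opening the stream.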

conn.exercise is used in this example, but create_and_exercise, exercise_by_key and create commands are also available.

Query

Dazl has another command, query/query_many, which lets the program continue once the query is finished instead of continuing to stream. Commands can also be defined and later submitted together with other commands as a single transaction. The following example queries for all current Notification contracts, then submits all Acknowledge commands together:

# Query only Notification contracts and build a list of "Acknowledge" commands

commands = []

async with conn.query(Templates.Notification) as stream:

    async for event in stream.creates():

        commands.append(ExerciseCommand(event.contract_id, "Acknowledge", {}))

# Submit all commands together after the query completes

await conn.submit(commands)

External Connectivity

Users can connect Python automations on their ledgers to services running on the internet outside of Daml Hub. The outgoing IP address is dynamically set. For incoming connections, Daml Hub provides a webhook URL: http://{ledgerId}.daml.app/pythonbot/{instanceId}/. This link can be copied from the Status page for the running instance. To accept traffic to that endpoint, you can run a webserver (such as with aiohttp) on the default 0.0.0.0:8080. A request pointed directly to the webhook URL is routed to the root directory of your server.
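A minimal sketch of a server that could receive webhook traffic on 0.0.0.0:8080 follows. It uses Python's standard-library http.server rather than aiohttp so that it is self-contained. The WebhookHandler and make_server names and the JSON response bodies are assumptions made for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class WebhookHandler(BaseHTTPRequestHandler):
    """Answers GET and POST requests with a small JSON document."""

    def _reply(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self) -> None:
        # Requests sent to the webhook URL arrive at the server root "/".
        self._reply(200, {"status": "ok", "path": self.path})

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = self.rfile.read(length)
        self._reply(200, {"received_bytes": len(payload)})

    def log_message(self, format: str, *args) -> None:
        pass  # Silence the default per-request logging.


def make_server(host: str = "0.0.0.0", port: int = 8080) -> HTTPServer:
    """Create (but do not start) the webhook server."""
    return HTTPServer((host, port), WebhookHandler)
```

Calling make_server().serve_forever() starts the server and blocks. In an automation that also runs a Dazl stream, the server would need to run in a separate thread or be replaced with an asyncio-based server such as aiohttp.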

Write a JVM Automation

This guide outlines how to create a JVM automation that can be run in Daml Hub. JVM automations can be written in any language that can be run on the JVM (most typically, Java and Scala).

Note that JVM automations are only supported for the [Scratchpad service](/docs/quickstart/console#scratchpad-service) and the [Participant service](/docs/quickstart/console#participant-service).

The code for this example can be found in the open-source Github repo digital-asset/hub-automation-example/java. The example can be copied and adjusted to your application.

Note that the Daml model in example-model is for reference and does not need to be copied when using the code as a template. The Daml model in example-model has its templates generated into the source code via daml codegen. You can either build a model from this example or replace the codegen and model with your own.

For more examples of how to use the Daml Java Bindings, please refer to https://github.com/digital-asset/ex-java-bindings.

Please bear in mind that this code is provided for illustrative purposes only, and as such may not be production quality and/or may not fit your use cases.

Build the automation

The hub-automation-example repository has a simple skeleton example of a JVM automation that can be run in Daml Hub.

To build

Prerequisites:

- Maven

- JDK 11 or higher (JDK 17 preferred)

If you have checked out the full example, you can build everything with the following command:

make all

Running this command uses Maven to build and package a 'fat' .jar file with the automation. It then copies the file with the correct version and name (as set in the Makefile) into the root directory. The .jar file is the file to upload to Daml Hub to run the automation.

To run locally

# start up a local sandbox

daml sandbox

# start up the jvm automation

./run_local.sh

Structure

pom.xml

Directory structure

├── Makefile

├── README.md

├── pom.xml

├── run_local.sh

└── src

└── main

├── java

│ └── examples

│ └── automation

│ ├── Main.java

│ ├── Processor.java

│ └── codegen

│ ├── da

│ │ ├── internal

│ │ │ └── template

│ │ │ └── Archive.java

│ │ └── types

│ │ └── Tuple2.java

│ └── user

│ ├── Acknowledge.java

│ ├── Alias.java

│ ├── Change.java

│ ├── Follow.java

│ ├── Notification.java

│ └── User.java

└── resources

└── logback.xml

main

public class Main {

public static void main(String[] args) {

String appId = System.getenv("DAML_APPLICATION_ID");

String ledgerId = System.getenv("DAML_LEDGER_ID");

String userId = System.getenv("DAML_USER_ID");

String[] url = System.getenv("DAML_LEDGER_URL").split(":");

String host = url[0];

int port = Integer.parseInt(url[1]);

}

}

Configuration

You can configure an automation that may differ from deployment to deployment by uploading a configuration file when the automation is deployed.

This configuration can be of any type, for example .json, .toml, or .yaml.

To access this configuration file, set the environment variable CONFIG_FILE to point to a location on the volume where the configuration file is stored. This file can then be parsed by the automation.

For example if a JSON file was uploaded:

package examples.javabot;

import org.json.JSONException;

import org.json.JSONObject;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

import java.util.Optional;

public class ConfigurationParser {

private static final Logger logger = LoggerFactory.getLogger(ConfigurationParser.class.getName());

static void parseConfigFile() {

try {

Optional<String> configFilePath = Optional.ofNullable(System.getenv("CONFIG_FILE"));

if (configFilePath.isPresent()) {

String configContent = Files.readString(Paths.get(configFilePath.get()));

if (!configContent.isBlank()) {

logger.info(configContent);

JSONObject config = new JSONObject(configContent);

logger.info("configFilePath: " + configFilePath.get());

logger.info("configFileContents: " + config.toString(4));

} else {

throw new IOException("No config file content found");

}

}

else {

throw new IOException("CONFIG_FILE environment variable is not set");

}

} catch (IOException | JSONException e) {

// Catch any file read or JSON parsing errors in case the argument JSON file wasn't uploaded.

// Since this is just an example we don't need to worry about that currently.

logger.warn(e.toString(), e);

}

}

}

To run on Daml Hub

Determine what user and party you want the automation to run as.

Create a new party from the Identities / Party tab and give it a meaningful display name and a hint of your choice. Create a new user from the Identities / User tab and populate the chosen party as the primaryParty for that user.

Once you have built the JAR file that contains all dependencies, start a new service on Daml Hub (a scratchpad or a participant connected to a synchronizer), go to the Deployments tab, and upload the JAR.

Once the JAR is uploaded, you can start a new instance of the automation and run it as the newly created user.

Select the configuration desired for that deployment following the instructions in the previous section.

External Connectivity

Outbound Connectivity

JVM automations running on participants can connect to services running on the internet outside of Daml Hub. The outgoing IP address is dynamically set.

Inbound Connectivity

For incoming connections, Daml Hub provides a webhook URL of http://{ledgerId}.daml.app/automations/{instanceId}/. This link can be copied from the Status page for the running instance.

To accept traffic to that endpoint, you can run a webserver on the default 0.0.0.0:8080. A request sent directly to the webhook URL is routed to the root / of your server.

Reading from the Ledger

JVM automations running on Daml Hub generally use the Daml Java Bindings to react to incoming Daml contracts.

Package IDs

The package ID is the unique identifier of the .dar of the contracts to follow. Including the package ID ensures that the automation only reacts to templates from the specified Daml model. If the package ID is not included, the gRPC stream returns all templates that have the same name. This is particularly important when a new version of a Daml model is uploaded to the participant, since the names of the templates may remain the same.

The package ID of a .dar can be found by running daml damlc -- inspect /path/to/dar | grep "package"

Stream

After defining the templates, the example automation in this repository sets up a stream that runs indefinitely. This stream sends a log message when a contract is created or deleted, or when the stream has reached the current state of the participant. If a Notification contract is created, it automatically exercises the Acknowledge choice:

public void runIndefinitely() {

final var inclusiveFilter = InclusiveFilter

.ofTemplateIds(Set.of(requestIdentifier));

// specify inclusive filter for the party attached to this processor

final var getTransactionsRequest = getGetTransactionsRequest(inclusiveFilter);

// this StreamObserver reacts to transactions and prints a message if an error occurs or the stream gets closed

StreamObserver<GetTransactionsResponse> transactionObserver = new StreamObserver<>() {

@Override

public void onNext(GetTransactionsResponse value) {

value.getTransactionsList().forEach(Processor.this::processTransaction);

}

@Override

public void onError(Throwable t) {

logger.error("{} encountered an error while processing transactions : {}", party, t.toString(), t );

System.exit(0);

}

@Override

public void onCompleted() {

logger.info("{} transactions stream completed", party);

}

};

logger.info("{} starts reading transactions", party);

transactionService.getTransactions(getTransactionsRequest.toProto(), transactionObserver);

}

Querying Ledger

After defining the templates, the example automation can also query the participant for all current Notification contracts and then submit all Acknowledge commands together.

Reading from the Participant Query Store (PQS)

A JVM automation can also query the PQS Postgres database if you want to take an action based on a particular state of the ledger.

PQS queries are also the recommended way of reading data if you need to query for archived historical information, rather than relying on the stream of gRPC transactions.

Connecting to Participant Query Store (PQS)

The full JDBC URL for connecting to the Postgres database is made available to your running JVM automation as the environment variable PQS_JDBC_URL.

You can read from this environment variable and then create a Postgres database connection using your JDBC driver of choice.

The example below is for illustrative purposes only:

import org.postgresql.ds.PGSimpleDataSource;

// Wraps a PostgreSQL data source configured from the PQS JDBC URL

public class PqsJdbcConnection {

private final PGSimpleDataSource dataSource;

public PqsJdbcConnection(String jdbcUrl) throws ClassNotFoundException {

// Fail early if the PostgreSQL JDBC driver is not on the classpath

Class.forName("org.postgresql.Driver");

this.dataSource = new PGSimpleDataSource();

dataSource.setUrl(jdbcUrl);

}

}

// Elsewhere in the automation (for example, at startup), read the URL and create the connection:

String jdbcUrl = System.getenv("PQS_JDBC_URL");

PqsJdbcConnection pqsJdbcConnection = new PqsJdbcConnection(jdbcUrl);

Creating Custom Indexes and Performance Tuning the Participant Query Store (PQS)

This section briefly discusses optimizing the PQS database to make the most of the capabilities of PostgreSQL. The topic is broad, and there are many resources available. Refer to the PostgreSQL documentation for more information, particularly the docs on JSON indexing.

PQS makes extensive use of JSONB columns to store ledger data. Familiarity with JSONB is essential to optimize queries. The following sections provide some tips to help you get started.

Indexes are an important tool for improving the performance of queries with JSONB content. Users are expected to create JSONB-based indexes to optimize their model-specific queries, where additional read efficiency justifies the inevitable write-amplification. Simple indexes can be created using the following helper function. More sophisticated indexes can be created using the standard PostgreSQL syntax.

To tune and optimize queries on your PQS instance, start by using Postgres' analysis tools to identify execution times and determine which queries are the most expensive.

After analyzing, if you find that your queries would benefit from custom indexes, you can create custom indexes on your Hub-hosted PQS instance.

To create an index, embed the index creation code in your Java automation. Run a dedicated Java automation specifically for creating the index, separate from your regular automation.

When deploying the automation code that creates the index onto Daml Hub, select pqsindexowner as the PQS Postgres User to grant elevated rights for creating indexes.

An example of index creation is shown below. You can find fully working Java automations that create indexes in the hub-automation-example repository.

Create Index Example Java Code

public JSONArray createIndex(Identifier template) throws SQLException {

List<String> contractsList = new ArrayList<String>();

String createIndexStatement = createIndexQuery("example_model_request_id",

"example-model:" + makeTemplateName(template),

"((payload->>''party'')::text)",

"btree");

int s = callableStatement(createIndexStatement);

// Record the result returned by the statement

contractsList.add(String.valueOf(s));

return new JSONArray(contractsList);

}

Further guidance on how to index the PQS is detailed in the PQS User Guide indexing section.

Redacting from the Participant Query Store (PQS)

To remove sensitive or personally identifiable information from contracts and exercises within the PQS database, you can redact archived contracts.

Guidance on redaction is detailed in the PQS User Guide redaction section.

When deploying automation code that performs the redaction onto Daml Hub, select pqsredactor as the PQS Postgres User to grant the rights needed for performing the redaction.

Example Java code:

public JSONArray redactByContractId(String contractId, Identifier template) throws SQLException {

String redactionId = UUID.randomUUID().toString();

List<String> contractsList = new ArrayList<String>();

String redactionStoredProc = String.format("select redact_contract('%s', '%s') from archives('%s')", contractId, redactionId, makeTemplateName(template));

List<Map<String, Object>> list = runQuery(redactionStoredProc);

list.forEach(contracts -> contracts.forEach((y, payload) -> contractsList.add(payload.toString())));

return new JSONArray(contractsList);

}

Querying Participant Query Store (PQS)

Guidance on how to query the PQS is detailed in the Participant Query Store (PQS) documentation.

Example JVM Automation Code Querying PQS

Example JVM automation code for querying the PQS, creating indexes, and redacting contracts is available in the java folder within hub-automation-example.

Managing Ledger Access on Daml Hub

This guide outlines how organizations can manage employees' access to ledgers on Daml Hub and explains how to control access to production ledgers.

Managing Ledger Access

Account holders in Daml Hub can add ledger collaborators. Ledger collaborators can develop on and operate a ledger from their own Daml Hub accounts. Ledger collaborators can do nearly everything a ledger owner can do, but they cannot delete the ledger, change ledger capacity, add/change subdomains, or add/remove other collaborators. Ledger collaborators must be Daml Hub users. By adding collaborators to ledgers, users can manage ledger access for employees inside their organization and third parties outside their organization. For details, see the documentation for the collaborators feature.

Control Access to Production Ledgers

Each Daml Hub account has one primary account holder. The primary account holder can create high capacity ledgers for the organization. Customers should go into production on high capacity ledgers. Each organization has a quota of 3 high capacity ledgers for production, staging, and QA or development. More information about ledger capacities and quotas can be found in the Daml Hub documentation. To control access to high capacity and production ledgers we advise that the primary account holder is assigned to an email address owned or operated by a trusted party within the organization. The primary account holder can then control access to the production and high capacity ledgers by adding or removing ledger collaborators.

App UI

The App UI feature of Daml Hub allows you to provide a frontend for your app by publishing files to be exposed by HTTPS over a ledger-specific subdomain. These files are associated with the underlying ledger, and any access to that ledger by a UI hosted in Daml Hub is authenticated and authorized through Daml Hub itself.

UI assets must be uploaded to Daml Hub and deployed as .zip files. The .zip should contain a single root directory containing an index.html along with the rest of the resources for your UI. As an example, for DABL Chat, the .zip file contains a top-level build/ directory with all the assets for the UI. If there's anything outside that top-level directory or the build/index.html file is missing, the Daml Hub console signals an error when you attempt to upload the UI .zip into the workspace collections.

For limits on file size for .zip, see file size limit above. (If you're building with npm, there is no need to include node_modules or the UI sources. The UI .zip file only includes the resources needed for the build output.)

$ unzip -l dablchat-ui-0.2.0.zip

Archive: dablchat-ui-0.2.0.zip

Length Date Time Name

--------- ---------- ----- ----

0 10-23-2020 10:56 build/

457 10-23-2020 10:56 build/favicon.ico

2275 10-23-2020 10:56 build/index.html

1290 10-23-2020 10:56 build/precache-manifest.4b024a2a5462fd8f8b4f3a3c11220b3e.js

12933 10-23-2020 10:56 build/logo512.png

1568 10-23-2020 10:56 build/asset-manifest.json

0 10-23-2020 10:56 build/static/

0 10-23-2020 10:56 build/static/css/

19361 10-23-2020 10:56 build/static/css/main.4e40915c.chunk.css.map

... elided ...

496 10-23-2020 10:56 build/manifest.json

1181 10-23-2020 10:56 build/service-worker.js

57 10-23-2020 10:56 build/robots.txt

4452 10-23-2020 10:56 build/logo192.png

--------- -------

3825920 30 files
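Before uploading, the layout rule described above (a single root directory that contains index.html, with nothing outside it) can be checked locally. The following is a rough sketch using Python's zipfile module. The check_ui_zip function is a hypothetical helper, and the validation that Daml Hub itself performs may differ.

```python
import zipfile
from typing import List


def check_ui_zip(path: str) -> List[str]:
    """Return a list of layout problems; an empty list means none were found."""
    with zipfile.ZipFile(path) as zf:
        names = [n for n in zf.namelist() if n]

    problems: List[str] = []
    # Entries without a "/" are files sitting outside any root directory.
    top_level_files = [n for n in names if "/" not in n]
    # The first path component of every other entry is its root directory.
    roots = {n.split("/", 1)[0] for n in names if "/" in n}

    if top_level_files:
        problems.append(f"files outside a root directory: {top_level_files}")
    if len(roots) != 1:
        problems.append(f"expected one root directory, found: {sorted(roots)}")
    elif f"{next(iter(roots))}/index.html" not in names:
        problems.append("index.html is missing from the root directory")
    return problems
```

For the archive listed above, check_ui_zip("dablchat-ui-0.2.0.zip") would be expected to return an empty list, since every entry sits under build/ and build/index.html is present.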

When your UI Asset is deployed to its associated ledger, you can publish it to a unique subdomain.

Ledger Initialization with Daml Script

Daml Script is a development tool with a simple API that runs against an actual ledger. Using it with Daml Hub achieves automated and accurate ledger bootstrapping, enabling you to skip manual onboarding and other preliminary logic to initialize the state of your ledger.

Migrate Scenarios to Daml Script

The simplest way to write Daml Script is to use pre-existing scenarios and make simple changes to them. The Daml docs have a migration guide that explains this simple translation. The Daml docs also have a guide for writing Daml Script from scratch, which is very similar to writing scenarios.

Changing the scenario code of your .dar ultimately changes its hash. To avoid this, create a directory containing the project with your Daml models and create a new project in that directory containing a single file for your Daml Script.

Now, copy your scenario code into the single file of your script project. Convert it to Daml Script and import the contracts used in your models, as well as Daml.Script.

In the case of onboarding ledger parties, your Daml Script should include a record containing the ledger parties in your workflow. This record is passed in as the argument for your script. Below is an example of a script that takes parties as input:

module Setup where

import Actors.Intern

import Actors.Analyst

import Actors.Associate

import Actors.VicePresident

import Actors.SeniorVP

import Actors.ManagingDirector

import Daml.Script

data LedgerParties = LedgerParties with

    intern : Party

    analyst : Party

    associate : Party

    vicePresident : Party

    seniorVP : Party

    managingDirector : Party

  deriving (Eq, Show)

initialize : LedgerParties -> Script ()

initialize parties = do

  let intern = parties.intern

      analyst = parties.analyst

      associate = parties.associate

      vicePresident = parties.vicePresident

      seniorVP = parties.seniorVP

      managingDirector = parties.managingDirector

  -- continue with the rest of your script's logic...

Configure Your Build With Daml Script

In the daml.yaml file of your script project, add daml-script to the list of dependencies, list the ledger parties that you specified as arguments to your script under parties, and under data-dependencies add the path to the corresponding .dar containing your models. You can omit the data-dependencies entry if you are not using a separate project for your script.

sdk-version: 1.3.0

name: bootstrap-script1

source: daml

init-script: Setup:initialize

parties:

- Intern

- Analyst

- Associate

- VicePresident

- SeniorVP

- ManagingDirector

version: 0.0.1

dependencies:

- daml-prim

- daml-stdlib

- daml-script

data-dependencies:

- ../bootstrap/.daml/dist/bootstrap-0.0.1.dar

sandbox-options:

- --wall-clock-time

Deploy Your .dar to a Daml Hub Ledger

In the terminal, execute the command daml build in your script project. Then, upload the .dar containing your models to an empty ledger in Daml Hub.

For limits on file size for .dar, see file size limit above.

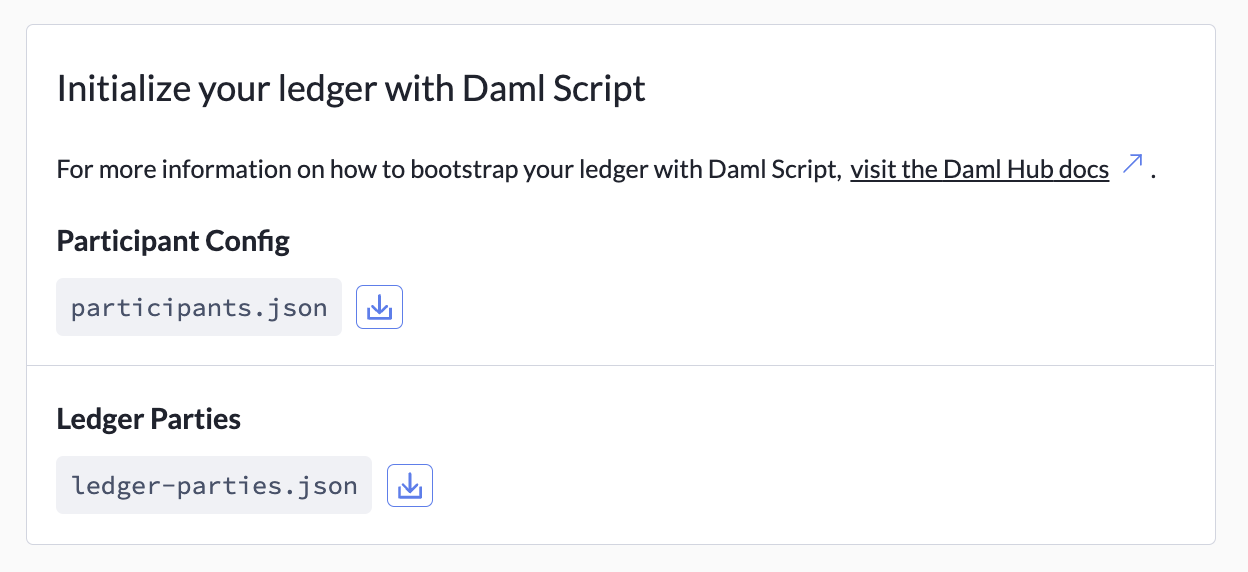

Download the Script’s Ledger Parties from Daml Hub

Next, add the ledger parties from your script to your ledger in the Parties tab of the Identities screen. This step only initializes the parties, so there are no contracts yet.

Now, download these parties and their access tokens to use as inputs to your script. Daml Hub produces a participants.json for you in the Ledger Settings tab.

Add the participants.json file to your script project.

Initialize Ledger Inputs

As the final step, create a file named ledger-parties.json in your script project.

The JSON here must be formatted [party_name] : value, which is the reverse mapping of the party_participants field in participants.json. Here is an example of the contents of ledger-parties.json:

{

"intern": "ledger-party-2b645725-41d2-441d-b259-fb8dab0aad11",

"analyst": "ledger-party-0423c1fe-2287-4bd0-a20b-9624b4005d13",

"associate": "ledger-party-581594c7-bd48-489b-a62d-9ec79c214cdf",

"vicePresident": "ledger-party-964ef06c-e7f3-41fe-8517-426797944b00",

"seniorVP": "ledger-party-0ae3d20a-b418-4b10-a20c-6f9ee0410233",

"managingDirector": "ledger-party-dab32e1e-c472-4930-96e5-f105c3303cb5"

}

Run the Script Against Your Ledger
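Because ledger-parties.json is described as the reverse mapping of the party_participants field, it could be derived from participants.json rather than written by hand. The sketch below assumes that party_participants is a flat JSON object mapping each party identifier to a name. That shape is inferred from the description above, not confirmed by it. Both function names are hypothetical. The resulting keys must still match the field names of the record your script expects (for example, vicePresident), so some renaming may be needed.

```python
import json
from typing import Dict


def invert_party_participants(participants: dict) -> Dict[str, str]:
    """Map each name in party_participants back to its party identifier."""
    inverted: Dict[str, str] = {}
    for party_id, name in participants["party_participants"].items():
        if name in inverted:
            # Two parties sharing a name cannot be inverted unambiguously.
            raise ValueError(f"duplicate party name: {name}")
        inverted[name] = party_id
    return inverted


def write_ledger_parties(participants_path: str, output_path: str) -> None:
    """Read participants.json and write the inverted mapping as JSON."""
    with open(participants_path, encoding="utf-8") as f:
        participants = json.load(f)
    with open(output_path, "w", encoding="utf-8") as f:
        json.dump(invert_party_participants(participants), f, indent=2)
```

A call such as write_ledger_parties("participants.json", "ledger-parties.json") would then produce the input file in the script project.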

Now that you have all the inputs for your Daml Script, run it against the ledger with the following command:

daml script --participant-config participants.json --json-api --dar <Name of .dar that contains the Daml Script> --script-name <Name of Daml Script function> --input-file ledger-parties.json

The command takes the participants.json file as --participant-config, the --json-api flag because Daml Hub uses the JSON API, the name of the .dar that contains the Daml Script (which in the example would be .daml/dist/bootstrap-script1-0.0.1.dar), the name of the script function (which in the example would be Setup:initialize), and the input file ledger-parties.json.
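If the bootstrap step is driven from Python, for instance as part of a deployment script, the same command can be assembled as an argument list. This is a small sketch: the daml_script_command function is a hypothetical helper, while the flags and example values are the ones given above.

```python
import shlex
from typing import List


def daml_script_command(
    dar: str,
    script_name: str,
    participant_config: str = "participants.json",
    input_file: str = "ledger-parties.json",
) -> List[str]:
    """Build the argument list for the daml script invocation shown above."""
    return [
        "daml", "script",
        "--participant-config", participant_config,
        "--json-api",
        "--dar", dar,
        "--script-name", script_name,
        "--input-file", input_file,
    ]


cmd = daml_script_command(".daml/dist/bootstrap-script1-0.0.1.dar", "Setup:initialize")
# Print the command in a shell-friendly form for review before running it.
print(shlex.join(cmd))
```

The list can be passed to subprocess.run(cmd, check=True) to execute it. Using a list rather than a single string avoids shell quoting issues with file paths.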

After running this command, return to your Daml Hub ledger, where you can see the contracts created by the Daml script bootstrap process.

For more details on Daml Script, visit the Daml docs.